03. Overview of Popular Keypoint Detectors

Invariance to Photometric and Geometric Changes

In the literature (and in the OpenCV library), there is a large number of feature detectors (including the Harris detector) to choose from. Depending on the type of keypoint to be detected and on the properties of the images, we need to consider how robust the respective detector is to both photometric and geometric transformations.

There are four basic transformation types we need to think about when selecting a suitable keypoint detector:

- Rotation

- Scale change

- Intensity change

- Affine transformation

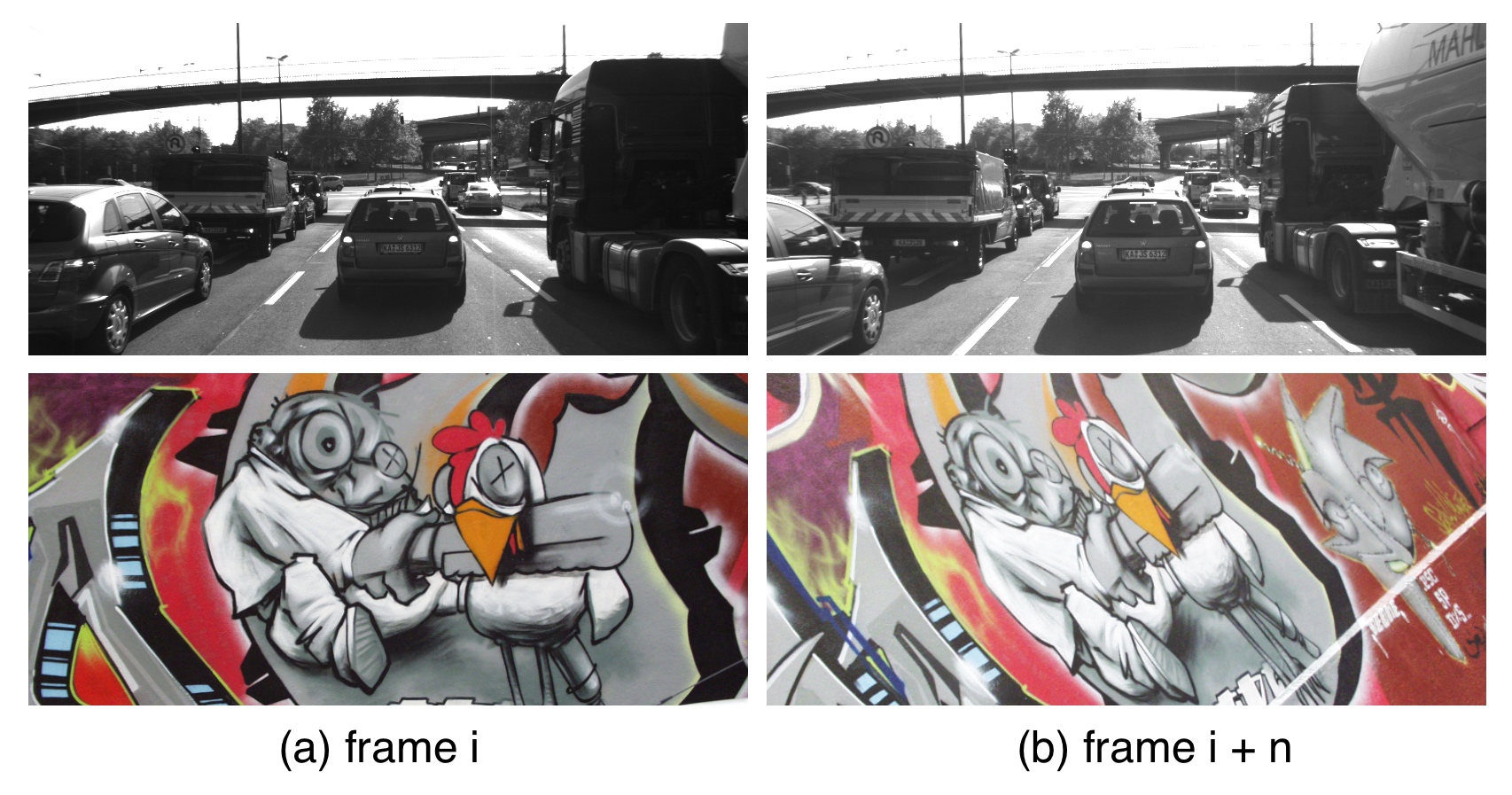

The figure above shows two images in frame i of a video sequence (a) that have been subjected to several transformations by frame i+n (b).

The graffiti sequence is one of the standard image sets used in computer vision (see also http://www.robots.ox.ac.uk/~vgg/research/affine/), and it shows all of the transformations listed above. In the highway sequence, when we focus on the preceding vehicle, there is only a scale change and an intensity change between frames i and i+n.

In the following, the above criteria are used to briefly assess the Harris corner detector.

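Rotation R:

If the image is rotated by R, the image gradients rotate with it and the structure tensor becomes R M R^T. Its eigenvalues stay the same. Therefore det(M), trace(M) and the corner response do not change either; only the orientation of the corresponding ellipse changes. The Harris detector is thus invariant to rotation.

Intensity change:

The corner response is computed from image derivatives only, so an additive intensity shift I -> I + b has no effect on it. A multiplicative change I -> a * I (a change in contrast) scales every derivative by a and the response by a^4. A fixed threshold will therefore select different points, which makes the detector only partially invariant to intensity changes.

Scale change:

The Harris detector evaluates a window of fixed size. If the image is magnified, a corner that fits into the window at one scale appears as a set of edges at the other. The same structure may then be classified as edges instead of a corner, so the detector is not invariant to scale changes.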

In summary, the Harris detector is robust under rotation and additive intensity shifts. It is sensitive to scale changes, multiplicative intensity shifts (i.e. changes in contrast) and affine transformations. Suppose, however, that the Harris detector could be modified to account for changes in object scale, e.g. when the preceding vehicle approaches. Despite its age, it might then be a suitable detector for our purposes.
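The two intensity statements in this summary can be checked numerically with a small sketch. It is plain C++ without OpenCV and makes several simplifying assumptions of our own:

- central-difference gradients;
- an unweighted square window instead of the Gaussian window w(x,y);
- k = 0.04;
- the hypothetical helper names `Image`, `harrisResponse` and `changeIntensity`.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Grayscale image stored row-major as doubles (hypothetical helper type).
struct Image {
    int width = 0, height = 0;
    std::vector<double> data;
    double at(int x, int y) const { return data[y * width + x]; }
};

// Corner response det(M) - k * trace(M)^2 at pixel (cx, cy).
// Assumption: central-difference gradients and an unweighted square window
// of the given radius stand in for the Gaussian window w(x,y).
double harrisResponse(const Image& img, int cx, int cy, int radius, double k = 0.04) {
    // the window plus the one-pixel gradient stencil must stay inside the image
    assert(cx - radius - 1 >= 0 && cx + radius + 1 < img.width);
    assert(cy - radius - 1 >= 0 && cy + radius + 1 < img.height);
    double sxx = 0.0, syy = 0.0, sxy = 0.0;  // entries of the structure tensor M
    for (int y = cy - radius; y <= cy + radius; ++y) {
        for (int x = cx - radius; x <= cx + radius; ++x) {
            const double ix = 0.5 * (img.at(x + 1, y) - img.at(x - 1, y));
            const double iy = 0.5 * (img.at(x, y + 1) - img.at(x, y - 1));
            sxx += ix * ix;
            syy += iy * iy;
            sxy += ix * iy;
        }
    }
    const double det = sxx * syy - sxy * sxy;
    const double trace = sxx + syy;
    return det - k * trace * trace;
}

// Applies the intensity change I -> a * I + b to every pixel.
Image changeIntensity(const Image& img, double a, double b) {
    Image out = img;
    for (double& v : out.data) v = a * v + b;
    return out;
}
```

Take a synthetic corner (a bright quadrant on a dark background). Adding a constant b to every pixel leaves the response unchanged. Doubling the contrast (a = 2) multiplies the response by 2^4 = 16. This is why a fixed threshold on the response is not invariant to contrast changes.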

Automatic Scale Selection

To detect keypoints at their ideal scale, we must know (or find) their respective dimensions in the image and adapt the size of the Gaussian window w(x,y) introduced earlier in this section accordingly. If the keypoint scale is unknown, or if the image contains keypoints of varying size, detection must be performed successively at multiple scale levels.

Depending on the increment of the standard deviation between two neighboring levels, the same keypoint may be detected multiple times. This raises the problem of choosing the "correct" scale, i.e. the one that best represents the keypoint.

In a landmark paper from 1998, Tony Lindeberg published a method for "Feature Detection with Automatic Scale Selection". In this paper, he proposed a function F(x,y,scale) for selecting those keypoints that show a stable maximum of F over scale. He termed the scale at which F is maximized the "characteristic scale" of the respective keypoint.

The following image shows such a function F, evaluated at several scale levels. It exhibits a clear maximum, which can be interpreted as the characteristic scale of the image content within the circular region.

![Function F evaluated over several scale levels for a circular image region](img/scale-space-sunflower.jpg)

Adapted from Lindeberg, "Feature Detection with Automatic Scale Selection".

The details of how to design a suitable function F are, however, beyond the scope of this course. The major take-away is that a good detector can automatically select the characteristic scale of a keypoint based on the structural properties of its local neighborhood. Modern keypoint detectors usually possess this ability and are thus robust under changes of the image scale.
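Without going into the design details, the idea can be illustrated with one well-known choice discussed by Lindeberg: the scale-normalized Laplacian of Gaussian, F = sigma^2 * |Laplacian of the image smoothed with a Gaussian of standard deviation sigma|. The following plain C++ sketch evaluates F at one pixel for a list of scales and returns the scale with the largest value. It rests on these assumptions:

- a sampled Gaussian kernel truncated at 4 sigma;
- clamp-to-edge borders;
- a 5-point Laplacian stencil;
- square images;
- the hypothetical helper names used below.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Square grayscale image as rows of doubles (hypothetical helper type).
using Grid = std::vector<std::vector<double>>;

// Sampled, normalized 1D Gaussian kernel, truncated at 4 sigma.
std::vector<double> gaussianKernel(double sigma) {
    const int radius = static_cast<int>(std::ceil(4.0 * sigma));
    std::vector<double> k(2 * radius + 1);
    double sum = 0.0;
    for (int i = -radius; i <= radius; ++i) {
        k[i + radius] = std::exp(-(i * i) / (2.0 * sigma * sigma));
        sum += k[i + radius];
    }
    for (double& v : k) v /= sum;
    return k;
}

// Separable Gaussian smoothing with clamp-to-edge borders.
Grid gaussianBlur(const Grid& img, double sigma) {
    assert(!img.empty() && img[0].size() == img.size());  // square images only
    const int n = static_cast<int>(img.size());
    const std::vector<double> k = gaussianKernel(sigma);
    const int r = static_cast<int>(k.size()) / 2;
    auto clampIdx = [n](int i) { return i < 0 ? 0 : (i >= n ? n - 1 : i); };
    Grid tmp(n, std::vector<double>(n, 0.0));
    Grid out(n, std::vector<double>(n, 0.0));
    for (int y = 0; y < n; ++y)  // horizontal pass
        for (int x = 0; x < n; ++x)
            for (int j = -r; j <= r; ++j)
                tmp[y][x] += k[j + r] * img[y][clampIdx(x + j)];
    for (int y = 0; y < n; ++y)  // vertical pass
        for (int x = 0; x < n; ++x)
            for (int j = -r; j <= r; ++j)
                out[y][x] += k[j + r] * tmp[clampIdx(y + j)][x];
    return out;
}

// F = sigma^2 * |Lxx + Lyy| at (x, y), using the 5-point Laplacian stencil.
double normalizedLaplacian(const Grid& img, int x, int y, double sigma) {
    const Grid L = gaussianBlur(img, sigma);
    assert(x > 0 && y > 0 && x + 1 < static_cast<int>(L.size()) && y + 1 < static_cast<int>(L.size()));
    const double lap = L[y][x + 1] + L[y][x - 1] + L[y + 1][x] + L[y - 1][x] - 4.0 * L[y][x];
    return sigma * sigma * std::abs(lap);
}

// Returns the scale in `sigmas` at which F is largest (the characteristic scale).
double characteristicScale(const Grid& img, int x, int y, const std::vector<double>& sigmas) {
    assert(!sigmas.empty());
    double bestSigma = sigmas.front();
    double bestF = -1.0;
    for (double s : sigmas) {
        const double f = normalizedLaplacian(img, x, y, s);
        if (f > bestF) {
            bestF = f;
            bestSigma = s;
        }
    }
    return bestSigma;
}
```

For a Gaussian blob with standard deviation s, the response at the blob center is proportional to sigma^2 / (s^2 + sigma^2)^2, which is maximal at sigma = s. On a synthetic blob with s = 4, scanned with scales from 1 to 8 in steps of 0.5, the sketch should therefore pick a characteristic scale at or very close to 4. The sigma^2 factor matters: without it, the Laplacian magnitude simply decreases with increasing smoothing and has no interior maximum.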

Overview of Popular Keypoint Detectors

Keypoint detection is a very popular research area, and a large number of powerful algorithms have been developed over the years. Applications of keypoint detection include:

- object recognition and tracking;
- image matching and panoramic stitching;
- robotic mapping and 3D modeling.

Besides their invariance under the transformations mentioned above, detectors can be compared in terms of their detection performance and their processing speed.

The Harris detector, along with several other "classics", belongs to a group of traditional detectors that aim at maximizing detection accuracy. In this group, computational complexity is not a primary concern. The following list shows a number of popular classic detectors:

- 1988 Harris Corner Detector (Harris, Stephens)

- 1994 Good Features to Track (Shi, Tomasi)

- 1999 Scale Invariant Feature Transform (Lowe)

- 2006 Speeded Up Robust Features (Bay, Tuytelaars, Van Gool)

In recent years, a number of faster detectors have been developed that target real-time applications on smartphones and other portable devices. The following list shows the most popular algorithms belonging to this group:

- 2006 Features from Accelerated Segment Test (FAST) (Rosten, Drummond)

- 2010 Binary Robust Independent Elementary Features (BRIEF) (Calonder, et al.)

- 2011 Oriented FAST and Rotated BRIEF (ORB) (Rublee et al.)

- 2011 Binary Robust Invariant Scalable Keypoints (BRISK) (Leutenegger, Chli, Siegwart)

- 2012 Fast Retina Keypoint (FREAK) (Alahi, Ortiz, Vandergheynst)

- 2012 KAZE (Alcantarilla, Bartoli, Davison)
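FAST, the first entry in the list above, owes its speed to a very simple segment test. The following plain C++ sketch shows only that test. It omits the machine-learned decision tree and the non-maximum suppression that the full detector adds. The function name, the image representation and the default of n = 9 contiguous pixels are assumptions made for illustration. In OpenCV, the complete detector is available as `cv::FastFeatureDetector`.

```cpp
#include <array>
#include <cassert>
#include <vector>

// Offsets (dx, dy) of the 16 pixels on a Bresenham circle of radius 3.
constexpr std::array<std::array<int, 2>, 16> kCircle = {{
    {0, -3}, {1, -3}, {2, -2}, {3, -1}, {3, 0}, {3, 1}, {2, 2}, {1, 3},
    {0, 3}, {-1, 3}, {-2, 2}, {-3, 1}, {-3, 0}, {-3, -1}, {-2, -2}, {-1, -3}}};

// Segment test: (x, y) passes if at least n contiguous circle pixels are all
// brighter than I_p + t or all darker than I_p - t (hypothetical function name).
bool fastSegmentTest(const std::vector<std::vector<int>>& img, int x, int y, int t, int n = 9) {
    assert(y >= 3 && y + 3 < static_cast<int>(img.size()));
    assert(x >= 3 && x + 3 < static_cast<int>(img[0].size()));
    const int center = img[y][x];
    for (int sign : {+1, -1}) {  // +1: look for a brighter arc, -1: a darker arc
        int run = 0;
        // walk around the circle twice so arcs that wrap past index 0 are counted
        for (int i = 0; i < 32; ++i) {
            const auto& off = kCircle[i % 16];
            const int diff = img[y + off[1]][x + off[0]] - center;
            if (sign * diff > t) {
                if (++run >= n) return true;
            } else {
                run = 0;
            }
        }
    }
    return false;
}
```

Pixels in a uniform region or on a straight edge fail this test. The tip of a bright quadrant on a dark background passes it, because 11 of its 16 circle pixels form a contiguous darker arc. Only integer comparisons are needed, which is where the speed advantage over gradient-based detectors comes from.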

In this course, we will use the Harris detector and the Shi-Tomasi detector (which is very similar to Harris) as representatives of the first group of "classic" detectors. For the second group, we will use the OpenCV implementations of all algorithms in the list. Strictly speaking, BRIEF and FREAK are keypoint descriptors rather than detectors, so they are combined with a separate detector.
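The similarity between Harris and Shi-Tomasi is easiest to see from their corner scores. Both are computed from the same 2x2 structure tensor M:

- Harris uses det(M) - k * trace(M)^2.
- Shi and Tomasi use the smaller eigenvalue of M directly.

The following minimal C++ sketch shows both scores. The type and function names are hypothetical, and k = 0.04 is an assumed default.

```cpp
#include <cassert>
#include <cmath>

// Entries of the symmetric structure tensor M = [[a, b], [b, c]],
// already accumulated over a window (hypothetical helper type).
struct StructureTensor {
    double a, b, c;
};

// Harris & Stephens score: det(M) - k * trace(M)^2.
double harrisScore(const StructureTensor& m, double k = 0.04) {
    const double det = m.a * m.c - m.b * m.b;
    const double trace = m.a + m.c;
    return det - k * trace * trace;
}

// Shi & Tomasi score: the smaller eigenvalue of M.
double shiTomasiScore(const StructureTensor& m) {
    assert(m.a >= 0.0 && m.c >= 0.0);  // M is positive semi-definite
    const double halfTrace = 0.5 * (m.a + m.c);
    const double root = std::sqrt(0.25 * (m.a - m.c) * (m.a - m.c) + m.b * m.b);
    return halfTrace - root;
}
```

For M = diag(100, 1), an edge, the Shi-Tomasi score is 1 and the Harris score is negative. For M = diag(100, 100), a corner, both scores are large and positive. Rotating the edge tensor by 45 degrees (a = c = 50.5, b = 49.5) leaves both scores unchanged, because neither score depends on the orientation of M.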

Exercise

Before we go into the details of the above-mentioned detectors in the next section, use the OpenCV library to add the FAST detector alongside the already implemented Shi-Tomasi detector. Then compare both algorithms with regard to:

- (a) the number of keypoints;
- (b) the distribution of keypoints over the image;
- (c) processing speed.

Describe your observations with a special focus on the preceding vehicle.
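For parts (a) and (c), and for a rough look at (b) on the preceding vehicle, it can help to run each detector through the same measurement code. The sketch below is plain C++ and uses the hypothetical stand-in types `Point2` and `Box` instead of cv::KeyPoint and cv::Rect. The callable would wrap the Shi-Tomasi or FAST call from the workspace. The vehicle box is an assumption that you would have to choose by hand for the image at hand.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <functional>
#include <vector>

// Minimal stand-ins for a keypoint position and an axis-aligned rectangle (hypothetical).
struct Point2 {
    float x, y;
};
struct Box {
    float x, y, width, height;
};

struct DetectorStats {
    std::size_t numKeypoints = 0;
    std::size_t numInBox = 0;  // keypoints that fall on the preceding vehicle
    double milliseconds = 0.0;
};

// Times a single call of `detect` and counts how many keypoints lie inside `vehicle`.
DetectorStats evaluateDetector(const std::function<std::vector<Point2>()>& detect, const Box& vehicle) {
    const auto t0 = std::chrono::steady_clock::now();
    const std::vector<Point2> kpts = detect();
    const auto t1 = std::chrono::steady_clock::now();

    DetectorStats s;
    s.milliseconds = std::chrono::duration<double, std::milli>(t1 - t0).count();
    s.numKeypoints = kpts.size();
    for (const Point2& p : kpts) {
        if (p.x >= vehicle.x && p.x < vehicle.x + vehicle.width &&
            p.y >= vehicle.y && p.y < vehicle.y + vehicle.height) {
            ++s.numInBox;
        }
    }
    assert(s.numInBox <= s.numKeypoints);
    return s;
}
```

In the workspace, `detect` might be a lambda that runs cv::goodFeaturesToTrack or a cv::FastFeatureDetector on the current image and converts each keypoint position into a `Point2`. Running both detectors through the same helper keeps the timing and the vehicle count comparable. A single call is a noisy timing measurement, so averaging over several frames gives a fairer picture.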

Workspace

Workspace Information:

- Workspace type: react

userCode:

export CXX=g++-7

export CXXFLAGS=-std=c++17

Reflect

QUESTION:

Describe your observations from the exercise above with a special focus on the preceding vehicle.
